I wanted to learn how machine learning is used to classify images (image recognition). While browsing Kaggle's past competitions, I found the Dogs vs. Cats image classification competition, where the task is to classify whether an image contains a dog or a cat. A Google search helped me get started; here are some of the references I found quite useful: Yhat's Image Classification in Python and the scikit-image tutorial. The data is available here. I am using the first 501 dog images and the first 501 cat images from the train data folder. For testing, I selected the first 100 images from the test data folder and labeled them manually for verification.

##########################################

# View files in the directory

ls

Out:

Image_Classification.ipynb data/

# View files in the data directory

ls data

Out:

data/ test/ train/

# Import necessary libraries

import pandas as pd

import numpy as np

from skim age import io

from matplotlib import pyplot as plt

# Define location of data

import os

train_directory = "./data/train/"

test_directory = "./data/test/"

# Define a function to return a list containing the names of the files in a directory given by path

def images(image_directory):

return [image_directory+image for image in os.listdir(image_directory)]

images(train_directory)

Out:

In training directory, image filename indicates image label as cat or dog: Need to extract labels

## Extracting training image labels

train_image_names = images(train_directory)

# Function to extract labels

def extract_labels(file_names):

'''Create labels from file names: Cat = 0 and Dog = 1'''

# Create empty vector of length = no. of files, filled with zeros

n = len(file_names)

y = np.zeros(n, dtype = np.int32)

# Enumerate gives index

for i, filename in enumerate(file_names):

# If 'cat' string is in file name assign '0'

if 'cat' in str(filename):

y[i] = 0

else:

y[i] = 1

return y

extract_labels(train_image_names)

Out:

array([0, 0, 0, ..., 1, 1, 1], dtype=int32)

# Save labels

y = extract_labels(train_image_names)

# Save labels: np.save(file or string, array)

np.save('y', y)

# Images in test directory

images(test_directory)

Out:

## View image: Dog

# from skimage import io # (imported earlier)

temp = io.imread('./data/train/dog.20.jpg')

plt.imshow(temp)

Out:

## View image: Cat

# from skimage import io # (imported earlier)

temp = io.imread('./data/train/cat.4.jpg')

plt.imshow(temp)

Out:

# PCA on training data

pca = PCA(n_components = 2)

X = pca.fit_transform(data)

X.size

Out: 20040

X[:, 0[.size

Out: 1002

X[:, 1[.size

Out: 1002

# Create a dataframe

df = pd.DataFrame({"x-1": X[:, 0], "x-2": X[:, 1], "label" : np.where(y == 1, "Dog", "Cat")})

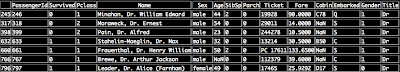

df

Out:

# Create a dataframe

np.sum(pca.explained_variance_ratio_)

Out: 0.6461222455062432

Here 2-Dimension PCA, captures 64.6% of the variation

Out: (100, 420000)

# Transforming test data

testX = pca.fit_transform(test)

testX.shape[1]

Out: 20

## Logistic regression

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression( )

logreg = clf.fit(X, y)

# Predict using Logistic Regression

y_predict_logreg = logreg.predict(testX)

y_predict_logreg

Out:

## Logistic Regression: Accuracy

# Load 'Actual' labels for test data

actual = pd.read_csv('ActualLabels.csv')

actual['Labels'].head( )

Out:

logreg_accuracy =

np.where(y_predict_logreg == actual['Labels'], 1, 0).sum()/float(len(actual))

logreg_accuracy

Out: 0.54

54% of the images were correctly classified using logistic regression (2D PCA)

## KNN classifier

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()

knn.fit(X, y)

Out: KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_neighbors=5, p=2, weights='uniform')

y_predict_knn

Out:

## KNN: Accuracy

knn_accuracy = np.where(y_predict_knn == actual['Labels'], 1, 0).sum()/float(len(actual))

knn_accuracy

Out: 0.52

##########################################

# View files in the directory

ls

Out:

Image_Classification.ipynb data/

# View files in the data directory

ls data

Out:

data/ test/ train/

# Import necessary libraries

import pandas as pd

import numpy as np

from skimage import io

from PIL import Image

from matplotlib import pyplot as plt

# Define location of data

import os

train_directory = "./data/train/"

test_directory = "./data/test/"

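# Define a function to return a list containing the paths of the files in the directory image_directory

def images(image_directory):
    return [image_directory + image for image in os.listdir(image_directory)]

images(train_directory)

In the training directory, each image's file name indicates its label (cat or dog), so the labels need to be extracted from the file names.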

## Extracting training image labels

train_image_names = images(train_directory)

# Function to extract labels

def extract_labels(file_names):
    '''Create labels from file names: Cat = 0 and Dog = 1'''
    # Create a vector of zeros with length = number of files
    n = len(file_names)
    y = np.zeros(n, dtype=np.int32)
    # enumerate gives the index along with each file name
    for i, filename in enumerate(file_names):
        # If the string 'cat' is in the file name assign 0, otherwise assign 1 (dog)
        if 'cat' in str(filename):
            y[i] = 0
        else:
            y[i] = 1
    return y

extract_labels(train_image_names)

Out:

array([0, 0, 0, ..., 1, 1, 1], dtype=int32)
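The substring test `'cat' in str(filename)` looks at the whole path, so it also depends on the directory names (a folder called `concatenated` contains "cat"). A slightly more defensive variant, sketched below under the assumption that training files follow the `cat.<n>.jpg` / `dog.<n>.jpg` pattern seen above, inspects only the base file name. `label_from_filename` is a hypothetical helper, not part of the original notebook, and the paths are made up.

```python
import os

def label_from_filename(path):
    """Return 0 for a cat and 1 for a dog, using only the file's base name.

    Assumes names like 'cat.4.jpg' or 'dog.20.jpg'; raises ValueError otherwise.
    """
    name = os.path.basename(path)          # './data/train/cat.4.jpg' -> 'cat.4.jpg'
    prefix = name.split('.')[0].lower()    # 'cat.4.jpg' -> 'cat'
    if prefix == 'cat':
        return 0
    if prefix == 'dog':
        return 1
    raise ValueError("Unexpected file name: %s" % name)

# The last path sits in a (hypothetical) folder whose name contains 'cat'
paths = ['./data/train/cat.4.jpg', './data/train/dog.20.jpg', './data/concatenated/dog.7.jpg']
labels = [label_from_filename(p) for p in paths]
print(labels)  # [0, 1, 1]
```

The substring version would label the third path as a cat, because `'cat' in 'concatenated'` is true.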

# Store the labels in y

y = extract_labels(train_image_names)

# Save labels to disk: np.save(file or string, array) writes y.npy

np.save('y', y)

# Images in test directory

images(test_directory)

## View image: Dog

# from skimage import io # (imported earlier)

temp = io.imread('./data/train/dog.20.jpg')

plt.imshow(temp)

## View image: Cat

# from skimage import io # (imported earlier)

temp = io.imread('./data/train/cat.4.jpg')

plt.imshow(temp)

By sorting the files in the folder, I found that the images are of different sizes (max size: cat.835.jpg, min size: cat.4821.jpg), so a standard size is needed for the analysis.

# Get size of images (Ref: stackoverflow)

image_size = []

for i in train_image_names:  # same list as images(train_directory)
    im = Image.open(i)
    image_size.append(im.size)  # A list of (width, height) tuples: [(x, y), …]

# Get mean of image size (Ref: stackoverflow)

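# Mean width and mean height. The loop variable is not named y, so it cannot overwrite the labels (list comprehensions leak their variable in Python 2); // keeps the integer means

[sum(dim) // len(dim) for dim in zip(*image_size)]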

Out: [403, 358]
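The `zip(*image_size)` idiom transposes the list of `(width, height)` tuples into one tuple of widths and one tuple of heights, so each mean is taken per dimension. The sketch below uses made-up sizes (not the real dataset) and also picks the largest and smallest images by pixel count, a rough programmatic stand-in for the folder sort mentioned above; ranking by width × height rather than by file size is an assumption.

```python
# Hypothetical (width, height) pairs, in the form returned by PIL's Image.size
image_size = [(500, 374), (300, 280), (489, 499), (320, 240)]

# Transpose: one tuple of widths, one tuple of heights
widths, heights = zip(*image_size)

# Integer mean per dimension (floor division, like the notebook's integer output)
mean_size = [sum(dim) // len(dim) for dim in (widths, heights)]

# Largest and smallest images by pixel count (width * height)
largest = max(image_size, key=lambda wh: wh[0] * wh[1])
smallest = min(image_size, key=lambda wh: wh[0] * wh[1])

print(mean_size, largest, smallest)  # [402, 348] (489, 499) (320, 240)
```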

Based on these means, every image is transformed to a standard size of (400, 350).

## Transforming the image

# Set up a standard image size based on approximate mean size

STANDARD_SIZE = (400, 350)

The code below is adapted from Yhat's Image Classification in Python.

# Function to read an image, resize it to STANDARD_SIZE and transform it to a matrix

def img_to_matrix(filename, verbose=False):
    '''
    takes a filename and turns it into a numpy array of RGB pixels
    '''
    img = Image.open(filename)
    # img = Image.fromarray(filename)
    if verbose:
        print("Changing size from %s to %s" % (str(img.size), str(STANDARD_SIZE)))
    # PIL's resize takes (width, height)
    img = img.resize(STANDARD_SIZE)
    # One (R, G, B) tuple per pixel, converted to a list of [R, G, B] lists
    img = list(img.getdata())
    img = list(map(list, img))
    img = np.array(img)
    return img

# Function to flatten numpy array

def flatten_image(img):
    '''
    takes in an (m, n) numpy array and flattens it
    into a 1-D array of length m * n
    '''
    s = img.shape[0] * img.shape[1]
    img_wide = img.reshape(1, s)
    return img_wide[0]
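Because `img_to_matrix` returns one row per pixel with three RGB columns, flattening interleaves the channels as R, G, B, R, G, B, and so on. The sketch below repeats `flatten_image` so it runs on its own, checks the ordering on a tiny made-up 2 × 2 image, and confirms the feature count for `STANDARD_SIZE`: 400 × 350 pixels × 3 channels. It assumes every image decodes to three channels (RGB).

```python
import numpy as np

def flatten_image(img):
    '''Flatten an (m, n) array into a 1-D array of length m * n (row-major order).'''
    return img.reshape(1, img.shape[0] * img.shape[1])[0]

# A made-up 2 x 2 RGB image in the form img_to_matrix returns: one row per pixel
pixels = np.array([[255, 0, 0],        # red
                   [0, 255, 0],        # green
                   [0, 0, 255],        # blue
                   [255, 255, 255]])   # white
flat = flatten_image(pixels)
print(flat.shape)         # (12,)
print(flat[:6].tolist())  # [255, 0, 0, 0, 255, 0]  (R, G, B of pixel 1, then of pixel 2)

# Feature count for STANDARD_SIZE = (400, 350) with 3 channels per pixel
STANDARD_SIZE = (400, 350)
n_features = STANDARD_SIZE[0] * STANDARD_SIZE[1] * 3
print(n_features)         # 420000
```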

## Prepare training data

data = []

# Use train_image_names (the list the labels came from) so each row of data lines up with y
for i in train_image_names:
    img = img_to_matrix(i)
    img = flatten_image(img)
    data.append(img)

data = np.array(data)

data.shape

Out: (1002, 420000)

data[1].shape

Out: (420000, )

That is 420,000 features per image (400 × 350 pixels × 3 RGB values). This is a lot for many algorithms, so the number of dimensions should be reduced. For this we can use an unsupervised learning technique called Principal Component Analysis (PCA), which derives a smaller number of features from the raw pixel data by finding the directions of greatest variation, reducing the dimensions of the dataset with minimal loss of information.
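To make the idea concrete, here is a minimal NumPy sketch of PCA via the singular value decomposition (not scikit-learn's code): center the data, find the directions of largest variance, and project onto the first k of them. The ratio it computes is the same quantity scikit-learn reports as `explained_variance_ratio_`. `pca_sketch` is a hypothetical helper, and the data is random and purely illustrative: 50 features that mostly vary along 2 hidden directions.

```python
import numpy as np

def pca_sketch(data, n_components):
    """Project the rows of `data` onto its top principal components.

    Returns (projected data, explained variance ratio of the kept components).
    """
    centered = data - data.mean(axis=0)           # PCA works on mean-centered data
    # Rows of vt are the principal directions, ordered by singular value
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    projected = centered @ components.T           # coordinates in the reduced space
    variance = s ** 2                             # proportional to the variance along each direction
    ratio = variance[:n_components] / variance.sum()
    return projected, ratio

rng = np.random.default_rng(0)
hidden = rng.normal(size=(100, 2)) * [10.0, 5.0]  # 2 hidden directions with large spread
mixing = rng.normal(size=(2, 50))                 # spread them over 50 features
data = hidden @ mixing + 0.1 * rng.normal(size=(100, 50))  # plus a little noise

X, ratio = pca_sketch(data, n_components=2)
print(X.shape)      # (100, 2)
print(ratio.sum())  # close to 1, since the data is essentially 2-dimensional
```

On real photographs the variance is spread over many more directions, so two components keep a much smaller share.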

# Import PCA

from sklearn.decomposition import PCA

# PCA on training data

pca = PCA(n_components = 2)

X = pca.fit_transform(data)

X.size

Out: 20040

X[:, 0].size

Out: 1002

X[:, 1].size

Out: 1002

# Create a dataframe

df = pd.DataFrame({"x-1": X[:, 0], "x-2": X[:, 1], "label" : np.where(y == 1, "Dog", "Cat")})

df

# Fraction of the total variance captured by the principal components

np.sum(pca.explained_variance_ratio_)

Out: 0.6461222455062432

Here, the 2-dimensional PCA captures 64.6% of the variance.

## Prepare testing data: PCA

test_images = images(test_directory)

test = []

for i in test_images:
    img = img_to_matrix(i)
    img = flatten_image(img)
    test.append(img)

test = np.array(test)

test.shape

Out: (100, 420000)

# Transforming test data

testX = pca.fit_transform(test)

testX.shape[1]

Out: 20
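One caveat about the cell above: `pca.fit_transform(test)` fits a new PCA on the test images, so `testX` is expressed in axes learned from the test set rather than the axes used by `X` (and therefore by the classifiers). The usual scikit-learn pattern is to fit on the training data only and call `pca.transform(test)` on new data. The NumPy sketch below illustrates the difference with hypothetical helpers `fit_pca` and `transform` on random data; to make the effect obvious, the test set is deliberately drawn with a different feature scaling than the training set.

```python
import numpy as np

def fit_pca(data, n_components):
    """Return (mean, components) of a PCA fitted to `data` (rows = samples)."""
    mean = data.mean(axis=0)
    _, _, vt = np.linalg.svd(data - mean, full_matrices=False)
    return mean, vt[:n_components]

def transform(data, mean, components):
    """Project `data` with an already fitted mean and set of components."""
    return (data - mean) @ components.T

rng = np.random.default_rng(1)
train = rng.normal(size=(200, 10)) * np.arange(1, 11)      # later features vary most
test = rng.normal(size=(50, 10)) * np.arange(10, 0, -1)    # earlier features vary most

mean, components = fit_pca(train, n_components=2)
test_in_train_space = transform(test, mean, components)    # like pca.transform(test)

test_mean, test_components = fit_pca(test, n_components=2)
test_refit = transform(test, test_mean, test_components)   # like pca.fit_transform(test)

# Same shape, but the coordinates live in different spaces
print(test_in_train_space.shape, test_refit.shape)  # (50, 2) (50, 2)
print(np.allclose(test_in_train_space, test_refit))  # False
```

Even when training and test images come from the same distribution, a refitted PCA can flip the sign of a component or change its direction slightly, so the test coordinates would not mean the same thing to a classifier trained on `X`.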

## Logistic regression

from sklearn.linear_model import LogisticRegression

clf = LogisticRegression( )

logreg = clf.fit(X, y)
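For intuition about what `clf.fit(X, y)` estimates on the PCA features, here is a minimal pure-Python sketch of logistic regression: a weighted sum of the features passed through the sigmoid, trained by batch gradient descent on the log-loss. It is not scikit-learn's implementation (which, among other things, applies L2 regularization by default); the helper names, toy points, learning rate and epoch count are all made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the mean log-loss."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss with respect to the logit
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_logistic(X, w, b):
    """Predict 1 (dog) when the estimated probability is at least 0.5, else 0 (cat)."""
    return [1 if sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) >= 0.5 else 0
            for xi in X]

# Toy 2-D points standing in for the two PCA features (0 = cat, 1 = dog)
X_toy = [[-2.0, -1.0], [-1.5, -2.0], [-1.0, -0.5], [1.0, 0.5], [1.5, 2.0], [2.0, 1.0]]
y_toy = [0, 0, 0, 1, 1, 1]

w, b = fit_logistic(X_toy, y_toy)
print(predict_logistic(X_toy, w, b))  # [0, 0, 0, 1, 1, 1]
```

The decision boundary is the line where the weighted sum equals zero, which is why a linear model struggles when cats and dogs overlap heavily in the PCA plane.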

# Predict using Logistic Regression

y_predict_logreg = logreg.predict(testX)

y_predict_logreg

## Logistic Regression: Accuracy

# Load 'Actual' labels for test data

actual = pd.read_csv('ActualLabels.csv')

actual['Labels'].head()

logreg_accuracy = np.where(y_predict_logreg == actual['Labels'], 1, 0).sum() / float(len(actual))

logreg_accuracy

Out: 0.54

54% of the images were correctly classified using logistic regression (2D PCA)
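The accuracy expression counts the test images whose predicted label matches the actual label and divides by the number of test images. The pure-Python sketch below, with made-up labels and a hypothetical `accuracy` helper, shows the same arithmetic.

```python
def accuracy(predicted, actual):
    """Fraction of positions where the predicted label equals the actual label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    matches = sum(1 for p, a in zip(predicted, actual) if p == a)
    return matches / float(len(actual))

# Made-up labels for 10 test images (0 = cat, 1 = dog)
predicted = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]
actual    = [0, 1, 0, 0, 1, 1, 0, 0, 1, 1]
print(accuracy(predicted, actual))  # 0.6
```

With the notebook's objects, the same value could also be written as `np.mean(y_predict_logreg == actual['Labels'])`, or computed with scikit-learn's `sklearn.metrics.accuracy_score(actual['Labels'], y_predict_logreg)`.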

## KNN classifier

from sklearn.neighbors import KNeighborsClassifier

knn = KNeighborsClassifier()

knn.fit(X, y)

Out: KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_neighbors=5, p=2, weights='uniform')
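The printed parameters describe the default model: `n_neighbors=5` neighbours, the Minkowski metric with `p=2` (ordinary Euclidean distance), and `weights='uniform'` (each neighbour gets one vote). A minimal pure-Python sketch of that rule follows. It is not scikit-learn's implementation (which can pick a tree-based neighbour search via `algorithm='auto'`), and the helper names and toy points are made up.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Minkowski distance with p = 2."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))

def knn_predict(train_X, train_y, query, k=5):
    """Majority vote among the k training points closest to `query` (uniform weights)."""
    nearest = sorted(range(len(train_X)), key=lambda i: euclidean(train_X[i], query))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy 2-D points standing in for the two PCA features (0 = cat, 1 = dog)
train_X = [[0.0, 0.0], [0.5, 0.2], [0.1, 0.6], [0.4, 0.4],
           [3.0, 3.0], [3.5, 2.8], [2.9, 3.6], [3.2, 3.1]]
train_y = [0, 0, 0, 0, 1, 1, 1, 1]

print(knn_predict(train_X, train_y, [0.3, 0.3]))  # 0
print(knn_predict(train_X, train_y, [3.1, 3.0]))  # 1
```

With k = 5 and two classes a vote can never tie, which is one reason odd values of k are common for binary problems.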

# Predict using KNN classifier

y_predict_knn = knn.predict(testX)

y_predict_knn

## KNN: Accuracy

knn_accuracy = np.where(y_predict_knn == actual['Labels'], 1, 0).sum()/float(len(actual))

knn_accuracy

Out: 0.52

52% of the images were correctly classified using KNN (2D PCA)

Both results are close to the 50% accuracy expected from random guessing between two classes. More sophisticated approaches, for example Support Vector Machines or Neural Networks, would likely classify the images with higher accuracy.